LNAI 9605 Machine Learning for Health Informatics available

14.12.2016 LNAI 9605 just appeared

Machine Learning for Health Informatics, Lecture Notes in Artificial Intelligence (LNAI) 9605

Holzinger, Andreas (ed.) 2016. Machine Learning for Health Informatics: State-of-the-Art and Future Challenges. Cham: Springer International Publishing, doi:10.1007/978-3-319-50478-0

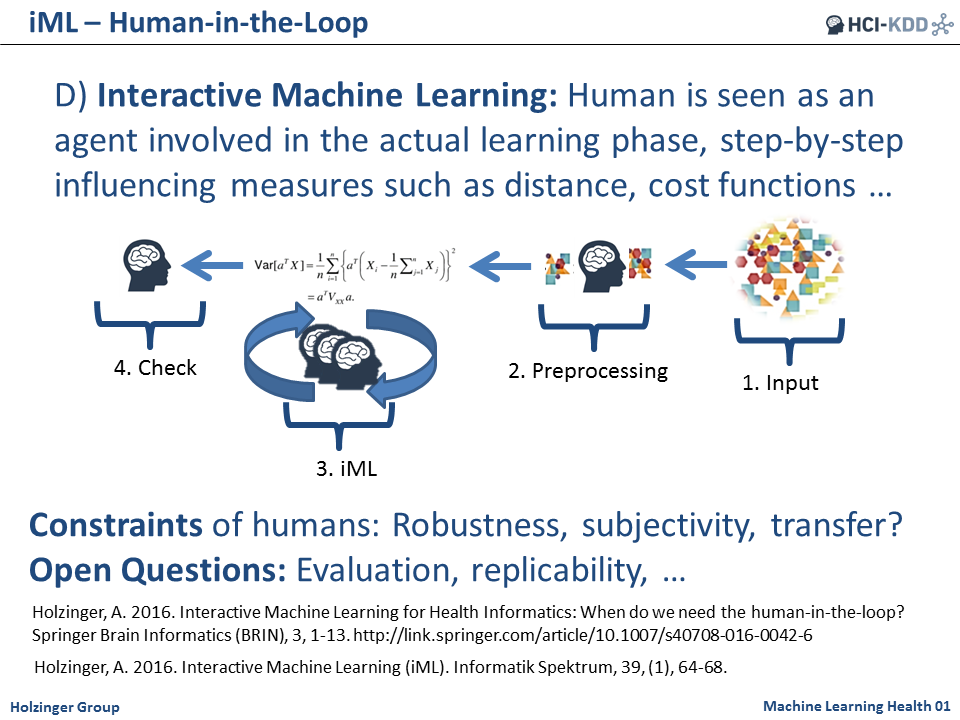

Machine learning (ML) is the fastest growing field in computer science, and Health Informatics (HI) is amongst the greatest application challenges, promising future benefits in improved medical diagnoses, disease analyses, and pharmaceutical development. However, successful ML for HI needs a concerted effort that fosters integrative research between experts from diverse disciplines, ranging from data science to visualization.

Tackling complex challenges needs both disciplinary excellence and cross-disciplinary networking without any boundaries. The HCI-KDD approach combines the best of two worlds, aiming to support human intelligence with machine intelligence.

This state-of-the-art survey is an output of the international HCI-KDD expert network and features 22 carefully selected and peer-reviewed chapters on hot topics in machine learning for health informatics; they discuss open problems and future challenges in order to stimulate further research and international progress in this field.